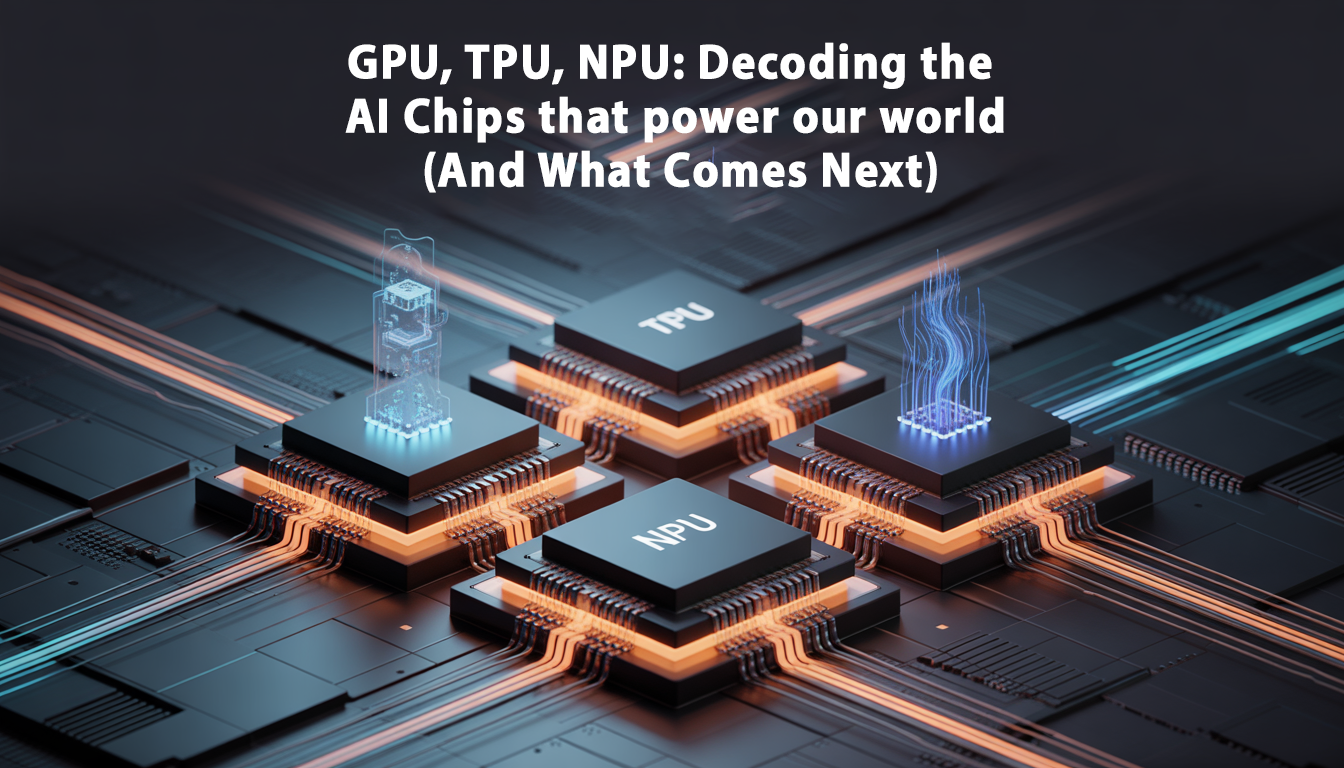

GPU, TPU, NPU: Decoding the AI Chips That Power Our World (And What Comes Next)

The AI revolution isn’t just about software—it’s a hardware battleground. We break down the specialized processors turning science fiction into reality.

Think of the world’s most powerful AI systems: ChatGPT’s conversations, Midjourney’s art, or Tesla’s Autopilot. Their “intelligence” feels like magic, but it runs on something very physical and meticulously engineered: specialized AI chips.

For decades, the CPU (Central Processing Unit) was the undisputed brain of every computer. But artificial intelligence demands trillions of mathematical calculations to train and run neural networks, and that load created a bottleneck the general-purpose CPU could not clear. The industry responded not with a single new chip but with a whole generation of processors, each optimized for a specific facet of AI work.

Welcome to the era of heterogeneous computing, where different chips work in concert. Understanding the roles of GPUs, TPUs, and NPUs is key to understanding the present and future of technology. This isn’t just for engineers; it’s about knowing what’s inside the devices that are reshaping our lives.

Illustrative image AI / HackStec

1. The Workhorse: GPU (Graphics Processing Unit)

The Origin Story: The GPU was born to render video game graphics—a task requiring millions of parallel calculations to paint pixels on a screen. Engineers soon realized this “parallel architecture” was also perfect for another massively parallel task: the matrix multiplications at the heart of neural network training.

What it Excels At:

- Training Massive AI Models: This is where the GPU reigns. Chips from NVIDIA (like the H100) and AMD (like the MI300X) power the data centers that spend weeks or months “teaching” models like GPT-4 on oceans of data.

- Parallel Processing: It has thousands of smaller, efficient cores designed to handle many calculations simultaneously, unlike a CPU’s fewer, more complex cores.
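To see why matrix multiplication suits thousands of simple cores, here is a minimal Python sketch (the function names are hypothetical, written only for this illustration). Each cell of the output matrix is a dot product that depends on one row of the first matrix and one column of the second, so every cell can be computed independently. The thread pool stands in for GPU cores; in CPython it demonstrates the decomposition, not a real speed-up.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, col):
    """One output cell: the dot product of a row and a column."""
    return sum(a * b for a, b in zip(row, col))


def matmul_parallel(A, B, workers=4):
    """Multiply A (n x k) by B (k x m), submitting each output cell as its own task."""
    cols = list(zip(*B))  # transpose B so each column is easy to read
    n, m = len(A), len(cols)
    # No cell depends on any other cell, so all n * m tasks could run at once.
    tasks = [(i, j) for i in range(n) for j in range(m)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = pool.map(lambda ij: dot(A[ij[0]], cols[ij[1]]), tasks)
        C = [[0] * m for _ in range(n)]
        for (i, j), value in zip(tasks, results):
            C[i][j] = value
    return C


print(matmul_parallel([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```

A single product of two 4096 × 4096 matrices has 16,777,216 such independent cells, which is exactly the kind of workload a GPU spreads across its many cores.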

The Analogy: If the AI model is a student, the GPU is the intense, full-time university where it learns everything from vast libraries of information.


2. The Specialist: TPU (Tensor Processing Unit)

The Origin Story: Born at Google in 2015 out of necessity. Running AI services like Search and Translate on general-purpose hardware was becoming inefficient and expensive, so Google designed the TPU from the ground up as a custom chip for neural network math, paired closely with its TensorFlow framework. The name refers to tensors, the multi-dimensional arrays that neural networks operate on.

What it Excels At:

- Inference at Scale: The first-generation TPU was built purely for “inference”, the act of running a trained model to make predictions. Later generations can also train models, but the TPU made its name on inference, where its speed and power efficiency stand out.

- Precision & Speed: It uses lower-precision number formats (8-bit integers in the first generation, bfloat16 from the second generation onward) that are accurate enough for neural networks, trading some numerical precision for higher speed and lower power consumption.
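bfloat16 keeps float32’s sign bit and all 8 exponent bits but only 7 of its 23 mantissa bits, so it covers the same numeric range with less precision. The Python sketch below (the helper name is hypothetical) converts a value by keeping the top 16 bits of its float32 encoding, rounding to nearest with ties to even; it assumes finite inputs and skips NaN handling.

```python
import struct


def to_bfloat16(x: float) -> float:
    """Round x to the nearest bfloat16 value and return it as a Python float.

    Assumes x is finite; NaN handling is omitted for brevity.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]  # float32 bit pattern
    # Round to nearest, ties to even, based on the 16 low bits we are about to drop.
    lsb = (bits >> 16) & 1
    bits = (bits + 0x7FFF + lsb) >> 16
    # Re-expand to float32 by filling the dropped mantissa bits with zeros.
    return struct.unpack("<f", struct.pack("<I", bits << 16))[0]


for value in (3.14159265, 1 / 3, 1e38):
    print(value, "->", to_bfloat16(value))
```

Here 3.14159265 becomes 3.140625 and 1/3 becomes 0.333984375, so only two to three significant decimal digits survive. Yet 1e38, far beyond float16’s maximum of 65504, still fits, because bfloat16 keeps the full float32 exponent.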

The Analogy: Once the AI student has graduated, the TPU is the specialized, high-efficiency workplace where it applies its knowledge to billions of real-world tasks, extremely quickly and cost-effectively.

3. The Integrator: NPU (Neural Processing Unit)

The Origin Story: As AI moved from the cloud to our pockets, a new need emerged: a tiny, ultra-efficient AI accelerator that could run on a smartphone’s battery. Enter the NPU, designed as a small block inside a larger system-on-a-chip (SoC).

What it Excels At:

- On-Device AI (Inference): NPUs are everywhere now: in your iPhone (Apple’s Neural Engine), in many Android phones (Qualcomm’s Hexagon NPU), and in recent laptop processors (AMD’s Ryzen AI, Intel’s Core Ultra). They handle tasks like photo enhancement, live translation, and voice assistant wake words.

- Power Efficiency: Their primary design goal is to perform AI tasks while using a minuscule amount of power, preserving battery life.
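One common way NPUs save power is by running models in low-precision integers such as INT8 instead of 32-bit floats, since integer multiply-accumulate units are smaller and use less energy than floating-point ones. The Python sketch below shows simple symmetric INT8 quantization with one scale per tensor; the helper names are hypothetical, and real NPU toolchains use more refined schemes.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    peak = max(abs(v) for v in values)
    scale = peak / 127 if peak else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def int8_dot(qa, scale_a, qb, scale_b):
    """Dot product computed entirely in integers, rescaled to float once at the end."""
    acc = sum(a * b for a, b in zip(qa, qb))  # integer multiply-accumulate
    return acc * scale_a * scale_b


weights = [0.5, -1.0, 0.25]
activations = [2.0, 1.0, -4.0]
qw, sw = quantize_int8(weights)
qa, sa = quantize_int8(activations)
exact = sum(w * a for w, a in zip(weights, activations))
print(qw, qa)
print("float:", exact, "int8:", int8_dot(qw, sw, qa, sa))
```

The integer result lands close to the float answer of -1.0, while every multiplication in the loop works on small integers, which is the kind of arithmetic an NPU is built to do cheaply.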

The Analogy: The NPU is the AI’s built-in, instant reflex. It’s the specialized block inside your device’s processor that handles intelligent tasks without needing to call the cloud, enabling real-time, private, and offline AI.


Head-to-Head: A Quick Comparison

| Chip | Best For | Where You Find It | Key Trait |
|---|---|---|---|
| GPU | Training large models, parallel compute | Data centers, gaming PCs, workstations | Raw parallel power |
| TPU | Cloud inference, TensorFlow tasks | Google Cloud servers, some research labs | Inference speed & efficiency |
| NPU | On-device inference, everyday AI | Smartphones, laptops, tablets, IoT devices | Extreme power efficiency |

What’s Next? The Future of AI Silicon

The race is just heating up. Here’s what the next generation holds:

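- Chiplet Architectures: Companies like AMD and Intel are moving beyond single, monolithic chips. They design “chiplets”: smaller, specialized dies (for example, CPU cores, graphics and AI blocks, or memory and I/O control) packaged together as one processor. Smaller dies are less likely to contain a manufacturing defect, so more of each wafer is usable, which lowers cost. The approach also lets designers scale performance by adding dies and build each block on the manufacturing process that suits it best.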

- Photonic & Quantum AI Chips: Researchers are exploring chips that compute with light (photons) instead of electrical signals, which could bring large speed and energy gains for specific AI operations such as matrix multiplication. Quantum co-processors for AI remain a longer-term research prospect.

- Neuromorphic Computing: This is a paradigm shift. Instead of simulating neural networks on traditional chips, these chips are built to physically mimic the brain’s structure (spiking neural networks, as in Intel’s Loihi research chips), aiming for brain-like energy efficiency in learning and sensing.

- Domain-Specific Architectures (DSAs): The trend is toward ultra-specialization. We’ll see chips designed exclusively for recommendation engines, autonomous driving perception, or protein folding in biology.

- The Software-Hardware Lock-In: The battle isn’t just about silicon; it’s about ecosystems. NVIDIA’s CUDA software platform ties developers to its GPUs. Google’s TPUs are programmed through the XLA compiler, which supports TensorFlow, JAX, and PyTorch. Future success will hinge on who builds the most attractive full stack for developers.
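The cost argument in the chiplet bullet can be made concrete with the simple Poisson yield model, in which the fraction of defect-free dies is exp(-area × defect density). The numbers below (0.1 defects per cm² and a 600 mm² design split into four 150 mm² chiplets) are hypothetical, and the sketch assumes each chiplet is tested before packaging while ignoring packaging and interconnect costs.

```python
import math


def poisson_yield(die_area_mm2: float, defects_per_cm2: float) -> float:
    """Fraction of dies with zero defects under a Poisson defect model."""
    area_cm2 = die_area_mm2 / 100.0  # 100 mm^2 per cm^2
    return math.exp(-area_cm2 * defects_per_cm2)


DEFECT_DENSITY = 0.1  # hypothetical defects per cm^2
TOTAL_AREA = 600.0    # hypothetical total logic area in mm^2
CHIPLETS = 4

mono = poisson_yield(TOTAL_AREA, DEFECT_DENSITY)
chiplet = poisson_yield(TOTAL_AREA / CHIPLETS, DEFECT_DENSITY)

# A defect scraps only the die it lands on, so yield is also roughly the
# share of wafer silicon that ends up in sellable parts.
print(f"monolithic die yield: {mono:.1%}")    # 54.9%
print(f"per-chiplet yield:    {chiplet:.1%}")  # 86.1%
```

Under these assumptions, one defect ruins the whole 600 mm² monolithic die, but only a quarter of that silicon in the chiplet design.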
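The spiking neural networks mentioned in the neuromorphic bullet are often built from leaky integrate-and-fire (LIF) neurons: a neuron accumulates input, leaks a little charge every step, and emits a spike only when its potential crosses a threshold. The discrete-time Python sketch below uses hypothetical parameters (a leak factor of 0.9 and a threshold of 1.0); neuromorphic chips build neurons like this into their circuitry, and the code only imitates the behavior.

```python
def lif_spikes(inputs, leak=0.9, threshold=1.0):
    """Simulate one leaky integrate-and-fire neuron; return the steps at which it spikes."""
    potential = 0.0
    spikes = []
    for t, current in enumerate(inputs):
        potential = leak * potential + current  # leak old charge, add new input
        if potential >= threshold:
            spikes.append(t)  # fire a spike
            potential = 0.0   # reset after firing
    return spikes


print(lif_spikes([0.3] * 12))  # [3, 7, 11]
print(lif_spikes([0.0] * 12))  # []: no input, no spikes
```

Because a neuron only communicates when it spikes, quiet neurons do little work, which is one source of the energy savings neuromorphic designs aim for.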


Conclusion: The Right Tool for the Right Job

The story of AI chips is a move from generalization to specialization. The CPU is the versatile manager. The GPU is the brute-force training powerhouse. The TPU is the cloud inference specialist. The NPU is the ubiquitous, efficient on-device assistant.

For us as users and tech enthusiasts, this specialization means smarter, faster, and more private experiences. For the industry, it represents the most intense and strategic hardware battle in decades. The companies that design the best AI silicon won’t just sell chips—they will define the capabilities of the intelligent world being built on top of them.

Which of these AI chips do you think will have the biggest impact on everyday life in the next 5 years? Is it the data center GPU, the cloud TPU, or the humble NPU in your pocket? Let the debate begin in the comments below.
